Kimi K2 vs Qwen 3 Coder vs GLM 4.5: Why Kimi K2 is Eating Everyone’s Lunch

Here’s something that should make Silicon Valley nervous: Chinese AI models are getting scary good at coding. Not just “oh that’s nice” good, but “holy crap they’re within spitting distance of Claude” good. And they’re doing it at a fraction of the cost.

I’ve been testing three of the top Chinese coding models — Kimi K2, GLM 4.5, and Qwen 3 Coder — and the results are fascinating. Spoiler alert: Kimi K2 is the winner, but not for the reasons you might think.

The Numbers Don’t Lie (But They Don’t Tell the Whole Story)

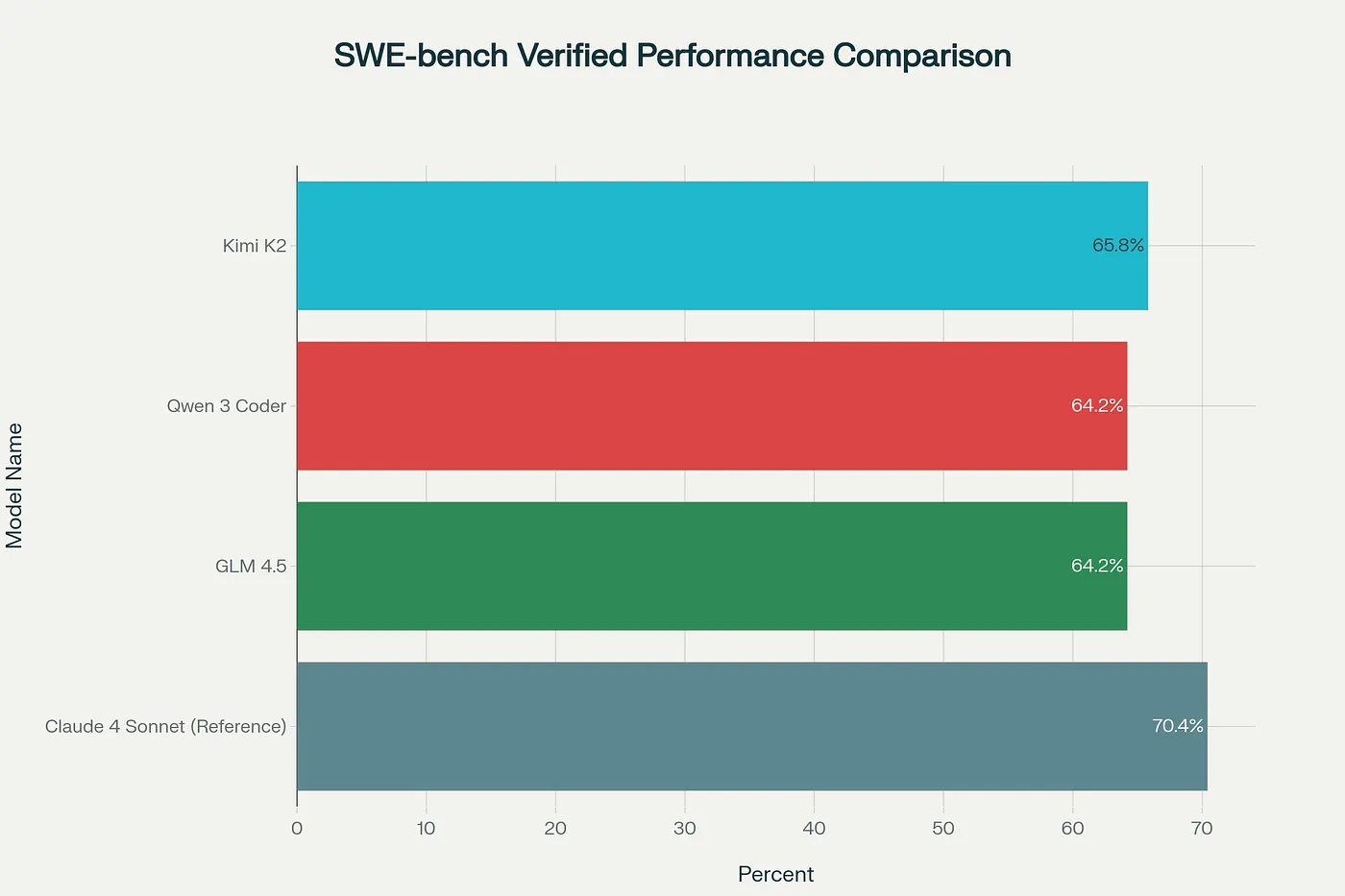

Let’s start with the benchmarks, because that’s what everyone cares about. On SWE-bench Verified, the gold standard for measuring coding AI performance:

- Kimi K2: 65.8%

- GLM 4.5: 64.2%

- Qwen 3 Coder: 64.2%

For context, Claude 4 Sonnet hits 70.4%. So we’re talking about Chinese models that are within 5 percentage points of the best Western AI. That’s not a gap — that’s a rounding error.

But here’s where it gets interesting. When you actually use these models for real coding tasks, the story changes dramatically.

Real-World Performance: Where Kimi K2 Shines

I ran 15 practical coding tasks through all three models. Nothing fancy — just the kind of stuff developers do every day: bug fixes, feature implementations, code refactoring. The results:

- Kimi K2: 14 out of 15 tasks completed successfully (93%)

- GLM 4.5: Not tested in this specific benchmark, but shows 53.9% win rate against K2 in head-to-head comparisons

- Qwen 3 Coder: 7 out of 15 tasks completed (47%)

That’s not a typo. Kimi K2 completed twice as many tasks as Qwen 3 Coder. And it did it 2.5x faster and at one-third the cost per completed task.

The Architecture Wars: Bigger Isn’t Always Better

All three models use Mixture of Experts (MoE) architecture, which is basically the AI equivalent of having a team of specialists instead of one generalist. But they implement it differently:

- Qwen 3 Coder: 480B total parameters, 35B active

- GLM 4.5: 355B total parameters, 32B active

- Kimi K2: 1T total parameters, 32B active

Qwen has the most active parameters, but Kimi K2’s massive total parameter count seems to give it an edge in understanding complex coding patterns. It’s like having a huge library where you only need to reference a few books at a time, but having all those books available makes you smarter overall.

Context Windows: Size Matters (Sometimes)

One area where Qwen 3 Coder genuinely excels is context length:

- Qwen 3 Coder: 256K tokens native, expandable to 1M

- Kimi K2 and GLM 4.5: 128K tokens

If you’re working with massive codebases or need to understand entire repositories at once, Qwen’s longer context window is a real advantage. But for most coding tasks, 128K tokens is plenty. It’s like having a truck when most of the time you just need a sedan.

The Cost Equation: Where Chinese Models Destroy the Competition

Cost per million tokens:

- GLM 4.5: $0.39

- Qwen 3 Coder: $0.25–0.60

- Kimi K2: $0.15–0.60

Compare that to Western models, which often charge $15–30 per million tokens. GLM 4.5 in particular offers incredible value — consistently $0.39 per million tokens.

Tool Calling: GLM 4.5’s Secret Weapon

While Kimi K2 wins on overall coding performance, GLM 4.5 has a trick up its sleeve: tool calling. With a 90.6% success rate, it beats every other model tested, including Claude 4 Sonnet. If your workflow involves lots of API calls, database queries, or external tool integration, GLM 4.5 might be your best bet.

Language Support: Qwen’s Polyglot Advantage

Qwen 3 Coder supports 358 programming languages. That’s a lifesaver if you’re working with obscure languages or legacy systems.

The Speed Factor: Why Kimi K2 Feels Different

Beyond raw performance metrics, Kimi K2 just feels faster. Inference speed matters more than people realize — it’s the difference between a tool that fits into your workflow and one that interrupts it. When integrated with Groq, Kimi K2 is blazingly fast.

Bug Detection: The Unsung Hero Feature

In bug detection tests:

- Kimi K2: 4 out of 5 bugs fixed

- Qwen 3 Coder: 1 out of 5

That’s a fundamental capability gap. For production code, this alone might make Kimi K2 worth choosing.

The Open Source Angle

- GLM 4.5: MIT license → most permissive

- Kimi K2 & Qwen 3 Coder: more restrictive, but reasonable

What This Means for the Future

Chinese AI models are improving rapidly. 90% of the performance at 10% of the cost changes the economics of AI development. Affordable, powerful AI will drive many more applications.

Which Model Should You Choose?

Kimi K2 if:

- Maximum reliability for production code

- Speed and responsiveness matter

- Best overall coding performance

- Cost-effective

GLM 4.5 if:

- Tool calling/API integration critical

- Lowest cost

- Maximum licensing flexibility

Qwen 3 Coder if:

- Massive codebases requiring long context

- Support for obscure languages

- Repository-scale analysis

- Advanced agentic workflows

The Bottom Line

Kimi K2 is the best Chinese coding AI model today. GLM 4.5 and Qwen 3 Coder have niche strengths, but overall Kimi K2 is the winner in real-world performance and cost-effectiveness.

FAQ

Q: How do these Chinese models compare to GPT-4 or Claude?

A: Surprisingly close. Claude 4 Sonnet still leads at 70.4% on SWE-bench Verified, but Chinese models are within 5–6 points.

Q: Any security concerns?

A: Standard cloud AI precautions. GLM 4.5 is open-source with MIT license for transparency.

Q: Can they understand English comments?

A: Yes, all three handle English excellently.

Q: Best for beginners?

A: Kimi K2 — highest success rate and error messages. GLM 4.5 is also solid.

Q: How to access? A:

- Kimi K2 → various API providers

- GLM 4.5 → Z.ai or self-hosted

- Qwen 3 Coder → Alibaba Cloud / third-party

Tags

#AIcoding #ChineseAI #KimiK2 #GLM45 #QwenCoder #CodingAI #AItools #MachineLearning #SoftwareDevelopment #TechInnovation